Build trust into your coding models

Proactively remove systemic flaws from training data to train foundational models that are secure by design.

Large language models are powerful but inherit flaws from their training data. SonarSweep is a service designed to remediate, secure, and optimize the coding datasets used in model pre-training and post-training.

The quality of AI-generated code is tied to the quality of the data LLMs were trained on. Research shows that even a small amount of poor-quality data can disproportionately “poison” a model, leading it to generate buggy, insecure code.

Vast public datasets, the foundation for most LLMs, are a chaotic mix of good code and snippets riddled with bugs and security vulnerabilities.

During training, the LLM internalizes these flawed patterns, unable to distinguish good code from bad. It learns to replicate the same mistakes it was taught.

In turn, LLMs reproduce these bugs and vulnerabilities in the code they generate. That code can make its way into the product, so it requires rigorous verification.

Generative AI is transforming how we code, but LLMs have a critical limitation: they often produce code with hidden bugs, security flaws, and maintainability debt. For LLM providers and companies that require a higher standard of quality, there is a clear need to fine-tune and customize models. SonarSweep provides the essential data quality layer for teams that need to:

Build models that are secure and reliable by design by improving the training data at the source, giving their customers a competitive edge in the market.

Develop custom models with confidence in private environments, helping their customers to meet strict compliance requirements and protect sensitive IP.

Create high-performance, cost-effective Small Language Models (SLMs) for specialized agentic workflows on platforms like Databricks and IBM.

Achieve state-of-the-art performance on a budget by optimizing training datasets to build more powerful models with less data and compute.

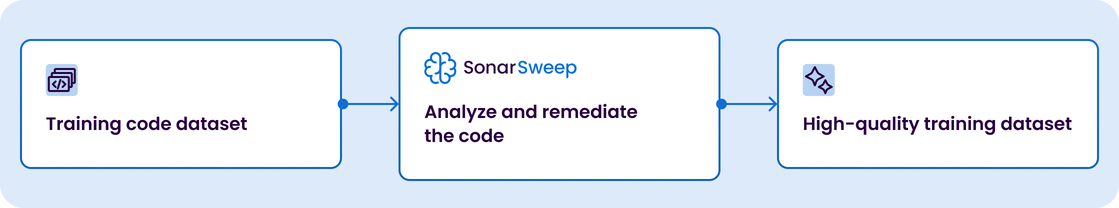

SonarSweep automatically analyzes and fixes thousands of bugs, vulnerabilities, and code quality issues within the training dataset at scale.

A strict filtering process is applied to remove low-quality code. The refined dataset is then balanced to ensure diverse and representative learning for robust model capabilities.

The final, “swept” dataset is an optimized, high-quality asset ready for model training, yielding significant improvement in the quality of generated code.
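The three stages described above (remediate, filter, balance) can be pictured with a minimal Python sketch. This is an illustration under stated assumptions, not SonarSweep's implementation: the `Sample` type, the substring-based toy rules, and the per-language cap are all hypothetical, and a real analyzer would reason over syntax trees and data flow rather than plain text.

```python
from collections import defaultdict
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Sample:
    """One training example; the fields are illustrative assumptions."""
    language: str
    code: str


# Hypothetical toy rule: map a risky pattern to a safer replacement.
# Naive substring matching is used only to keep the sketch short.
TOY_FIXES = {"yaml.load(": "yaml.safe_load("}
# Hypothetical patterns this toy has no automatic fix for.
TOY_UNFIXABLE = ("eval(",)


def remediate(sample: Sample) -> Sample:
    """Stage 1: rewrite known-bad patterns instead of discarding the sample."""
    code = sample.code
    for bad, good in TOY_FIXES.items():
        code = code.replace(bad, good)
    return replace(sample, code=code)


def passes_filter(sample: Sample) -> bool:
    """Stage 2: drop samples that still contain an unfixable pattern."""
    return not any(pattern in sample.code for pattern in TOY_UNFIXABLE)


def balance(samples: list[Sample], cap_per_language: int) -> list[Sample]:
    """Stage 3: cap each language so no single one dominates the dataset."""
    kept: list[Sample] = []
    counts: dict[str, int] = defaultdict(int)
    for sample in samples:
        if counts[sample.language] < cap_per_language:
            counts[sample.language] += 1
            kept.append(sample)
    return kept


def sweep(samples: list[Sample], cap_per_language: int = 2) -> list[Sample]:
    """Run the three stages in order: remediate, filter, balance."""
    fixed = [remediate(s) for s in samples]
    filtered = [s for s in fixed if passes_filter(s)]
    return balance(filtered, cap_per_language)
```

Calling `sweep(...)` on a small list of `Sample` objects returns the remediated samples that pass the filter, truncated to the cap for each language. The ordering of the stages mirrors the description above; the specific rules are placeholders.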


SonarSweep has demonstrated significant improvement in a model’s ability to produce high-quality, secure code without degrading functional performance.

SonarSweep leverages Sonar’s industry-leading code analysis engines to automatically process large volumes of training code, remediate issues, and transform flawed data into high-quality training examples.

By fixing code instead of deleting it, we retain valuable learning examples for the model, improving its understanding of complex patterns.

Our engine turns bad examples into good ones, systematically raising the overall quality and security posture of the entire dataset.
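To make the difference between fixing and deleting concrete, here is a small hedged sketch in Python. The rule (comparing to `None` with `==` rather than `is`) and the helper names `is_flawed`, `fix`, `delete_strategy`, and `fix_strategy` are assumptions chosen for illustration; they do not describe how SonarSweep detects or repairs issues.

```python
def is_flawed(code: str) -> bool:
    """Hypothetical toy check: flags `== None`, which PEP 8 advises against."""
    return "== None" in code


def fix(code: str) -> str:
    """Hypothetical toy repair: use an identity comparison instead."""
    return code.replace("== None", "is None")


def delete_strategy(samples: list[str]) -> list[str]:
    """Discard every flawed sample; the dataset shrinks."""
    return [s for s in samples if not is_flawed(s)]


def fix_strategy(samples: list[str]) -> list[str]:
    """Repair flawed samples in place; the dataset keeps its size."""
    return [fix(s) if is_flawed(s) else s for s in samples]


samples = [
    "if x == None: pass",
    "return a + b",
    "while y == None: y = next_item()",
]

deleted = delete_strategy(samples)  # only the clean sample survives
fixed = fix_strategy(samples)       # all three survive, with the flaw repaired
```

In this toy dataset, deletion keeps one of three samples, while fixing keeps all three with the flawed pattern rewritten. The surrounding loop and conditional structure in the repaired samples stays available as training signal.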

Powered by the same analysis trusted by over 7 million developers to secure 700 billion lines of code worldwide.

SonarSweep is now available in early access. Partner with Sonar to be among the first to build the next generation of safe, reliable, and secure coding models.


SonarSweep is positioned as a capability from Sonar that automates codebase housekeeping to elevate overall code quality. It helps teams reduce technical debt, standardize patterns, and keep repositories consistent so developers can focus on delivering features faster while maintaining quality at the source of new code.

By combining automated refactoring suggestions and structured remediation workflows, SonarSweep helps teams adopt practices focused on new code, which prevents issues from accumulating. It complements SonarQube, SonarQube Cloud, and SonarQube for IDE by turning identified issues into clear, repeatable actions that keep codebases healthy over time.